Last year Steve Freeman published a blog article describing how the use of dependency injection could lead to coupling between objects that should have no knowledge of each other. The essential idea is that using dependency injection uniformly for all relationships between objects within the program makes it harder to understand and control the higher level structure of the program; you turn your program into an unstructured bag of objects where every object can potentially talk to every other object.

I thought that I would write a bit about my approach to dealing with this problem in Java programs: how I try to add higher-level structure to the code, and how I use the tools to enforce this structure. I am going to take a long diversion through the practices I use to organise Java code before I talk about Dependency Injection. If you only want to read that bit, then skip to the end.

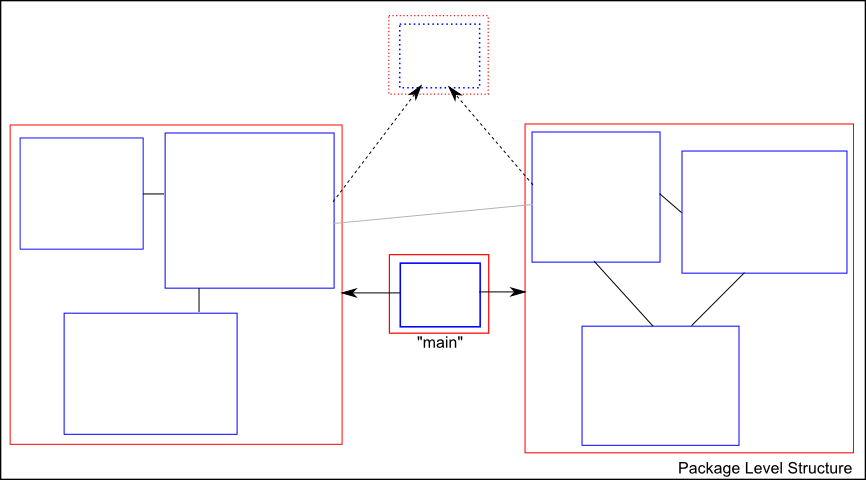

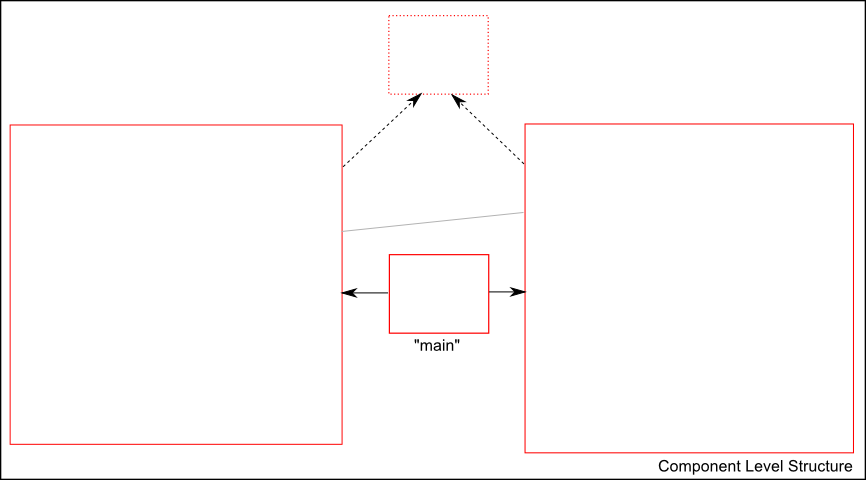

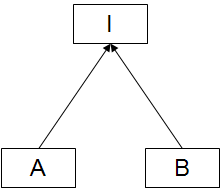

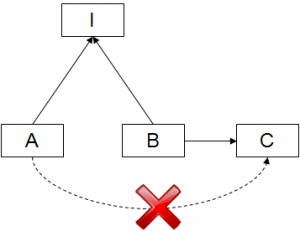

The following are my versions of the diagrams from a post about the problem with DI frameworks by David Peterson. I start with the same unstructured graph of objects and use the same basic progression in order to describe how I use the Java programming language to resolve these issues without having to sacrifice the use of my DI framework (should I want to use one).

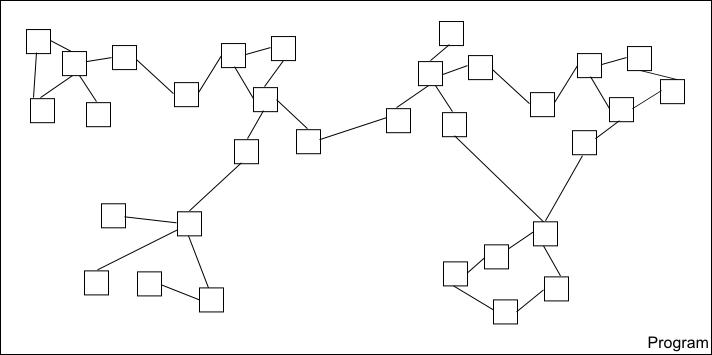

For the sake of argument, the program begins as an unstructured graph of objects dumped into a single package within a single component (or project). The objects are all compiled together in one invocation of the Java compiler. This program is likely to be quite difficult to understand and modify – not least, where do you even start trying to understand it? We can do better.

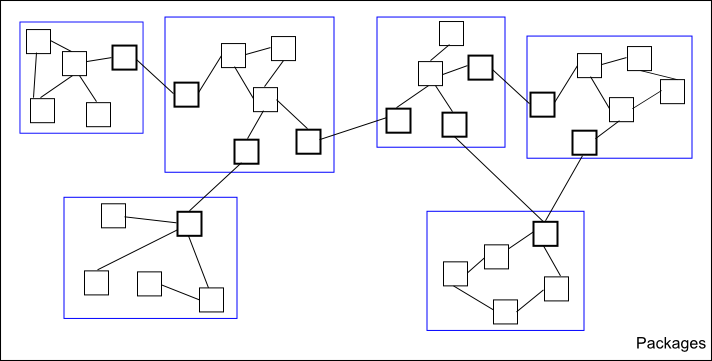

Types, Packages and the Classpath are the ways that the Java programming language offers compiler support for organising code into higher-level abstractions. The benefit of using packages is that the compiler's type system will enforce the abstraction boundary. If a type (class or interface) is declared package visible, that is, with no access modifier, then code outside the package that refers to that type by name will not compile.

I refactor the code by identifying related sets of classes and moving each set into a package. Without breaking the program I then mark every type that I can as package visible. Now I can see some of the structure of my code: I can see which classes are implementation details of a particular abstraction, and I can see what protocol each of my abstractions provides by looking at its public classes. I cannot accidentally depend on an implementation detail of my abstraction because the Java compiler will prevent me (provided I have defined sufficient types and don’t do too much reflection).
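As a rough illustration of the public/package-visible split, here is a minimal sketch. The names `Greetings`, `Greeter` and `PoliteGreeter` are hypothetical and not from any real code base, and the types are collapsed into one file only so that the sketch runs on its own; in a real project they would share a named package and the implementation would sit in its own file.

```java
// File: Greetings.java (hypothetical; imagine it under a package such as com.example.greeting)

/** Public protocol of the package: the only top-level type other packages can name. */
public final class Greetings {
    private Greetings() {}

    /** Public interface that clients program against. */
    public interface Greeter {
        String greet(String name);
    }

    /** Public factory: hands out an implementation without exposing its class. */
    public static Greeter polite() {
        return new PoliteGreeter();
    }

    /** Small demo entry point so the sketch runs as a single file. */
    public static void main(String[] args) {
        System.out.println(Greetings.polite().greet("Ada"));
    }
}

/**
 * Implementation detail. No access modifier means package visible:
 * code in another package that names PoliteGreeter will not compile.
 */
final class PoliteGreeter implements Greetings.Greeter {
    @Override
    public String greet(String name) {
        return "Good day, " + name + ".";
    }
}
```

Reading only the `public` declarations gives the protocol of the package; everything without a modifier is free to change without affecting callers elsewhere.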

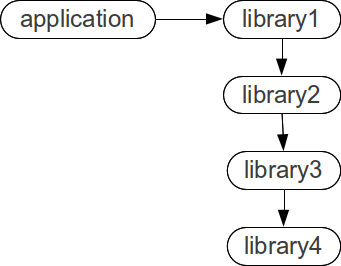

There might be a lot of packages involved in a realistic program, so I would like another level of abstraction that I can use to organise my packages into groups of related packages. I do this by organising my packages into libraries. All of the packages in a library will be compiled together in a single invocation of the compiler, but each of my libraries will be compiled separately. I want to detect any accidental dependencies between my libraries, so I will only put the minimum necessary libraries onto the classpath of each invocation of the compiler.

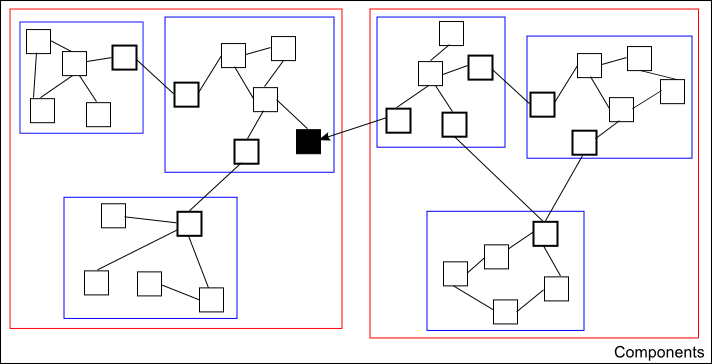

I can now see the high level component organisation of my program and I can see which components are used by a given component. I may even be able to see which component is the entry point of my program – the entry point component will transitively depend on all the other components. There are still two further properties I would like my code to have: I do not want to accidentally create a dependency between a package in one library and a package in another library (its public protocol should be clearly defined); and I would like the entry point of the application to be immediately obvious (so a code reader can start reading with no delay).

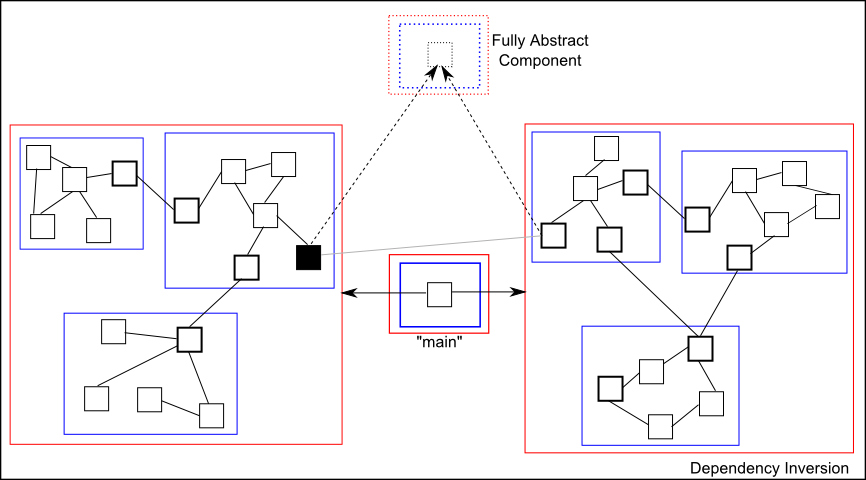

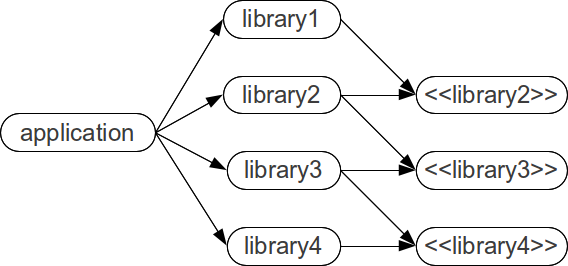

I extract the public interface of my library into a new library, and change the users of that library to depend only on the public interface. I also replace the implementation jar with the new API-only jar on the compiler classpath anywhere it occurs in my other libraries. This is a form of dependency inversion. The new interface library cleanly describes the protocol provided by the implementation and the separate compilation ensures that users of the library only depend on the public protocol.

I then extract the entry point (the highest level wiring) of the program into its own component. This component contains the minimum code necessary to instantiate the top level components, wire them together and start the execution. This is a form of inversion of control.
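The two extractions can be sketched in a single file, assuming three hypothetical libraries (`store-api`, `store-memory`, `recorder`) plus an entry-point component. All names here are invented for illustration. In a real build each section would be compiled separately, with only the API library on the classpath of its users; in one file the compiler cannot enforce that, so the comments mark the intended boundaries.

```java
import java.util.ArrayList;
import java.util.List;

// ---- Entry-point component: the only code that sees every library ----
final class EntryPoint {
    public static void main(String[] args) {
        MessageStore store = new InMemoryMessageStore(); // choose the implementation
        Recorder recorder = new Recorder(store);          // wire it into its user
        recorder.record("  hello  ");                     // start the execution
        System.out.println(store.all());
    }
}

// ---- API library (hypothetical "store-api"): interfaces only ----
interface MessageStore {
    void save(String message);
    List<String> all();
}

// ---- Implementation library (hypothetical "store-memory"): depends on store-api ----
final class InMemoryMessageStore implements MessageStore {
    private final List<String> messages = new ArrayList<>();

    @Override
    public void save(String message) {
        messages.add(message);
    }

    @Override
    public List<String> all() {
        return List.copyOf(messages); // defensive, unmodifiable copy
    }
}

// ---- Client library (hypothetical "recorder"): compiled against store-api only ----
final class Recorder {
    private final MessageStore store;

    Recorder(MessageStore store) {
        this.store = store;
    }

    void record(String text) {
        store.save(text.trim());
    }
}
```

`Recorder` never names `InMemoryMessageStore`, so swapping the implementation only touches `EntryPoint`.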

The structure of my program is now not only represented in the organisation of the code, but the structure is also enforced by the Java compiler.

I can look at the package structure of the code.

Or I can look at the component structure of the code.

What structure am I now able to obtain from the code?

- Package API – by looking at the visibility of my classes

- Package Relationships – by looking at the packages in each library

- Library API – by looking at the fully abstract library component

- Library Relationships – by looking at the classpath of each library

- Program high-level organisation – by looking at the classpath of each library and the entry-point wiring

Dependency Injection and Dependency Injection Frameworks

So why haven’t I mentioned Dependency Injection and Dependency Injection frameworks? And how does all this relate to the “Facebook Dependency Injection” problem?

I can now choose to use dependency injection as much or as little as I want/need to. If I want to inject one library into another, or inject one package into another then I can just provide an appropriate factory. If I want to use new, I can do that too. The use of package or public visibility will distinguish between the peer objects and the internal implementation details. The Java compiler will prevent me from creating any unwanted dependencies by enforcing package protection. The same argument applies to dependencies between libraries: I can distinguish the public API of the library by splitting it out; by only having the public API on the classpath I can be sure that I will not accidentally use an implementation detail of a dependency.
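A minimal sketch of mixing the two styles, assuming hypothetical names (`Formatter`, `UpperCaseFormatter`, `Report`): the peer abstraction arrives through an injected factory, here a plain `java.util.function.Supplier`, while an internal detail is created with `new`. The types are shown in one file for brevity; in a real code base `Formatter` would be a public type of another package or API library.

```java
import java.util.List;
import java.util.Locale;
import java.util.function.Supplier;

final class FactoryInjectionDemo {
    public static void main(String[] args) {
        // The wiring code decides which implementation the factory produces.
        Report report = new Report(UpperCaseFormatter::new);
        System.out.print(report.render(List.of("alpha", "beta")));
    }
}

/** Peer abstraction: part of the public protocol of its package. */
interface Formatter {
    String format(String line);
}

/** One possible implementation; an internal detail of the formatting package. */
final class UpperCaseFormatter implements Formatter {
    @Override
    public String format(String line) {
        return line.toUpperCase(Locale.ROOT);
    }
}

final class Report {
    private final Supplier<Formatter> formatters; // injected factory for the peer

    Report(Supplier<Formatter> formatters) {
        this.formatters = formatters;
    }

    String render(List<String> lines) {
        Formatter formatter = formatters.get();  // peer obtained through the factory
        StringBuilder out = new StringBuilder(); // internal detail: plain `new`
        for (String line : lines) {
            out.append(formatter.format(line)).append('\n');
        }
        return out.toString();
    }
}
```

`Report` depends only on the `Formatter` interface and the factory it is given, so the choice between injection and `new` is made per relationship rather than uniformly.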

The type system and compiler will help me prove to myself that the program will only instantiate an object graph with the structure that I intended.

I am now free to use a Dependency Injection framework anywhere within my code that I feel it is appropriate. I can use a Dependency Injection framework to wire up the implementation classes of a package, I can use it to wire up the packages of a library or I can use it to wire together my libraries – or any combination I choose. I can even use a different Dependency Injection framework within each of my libraries or even within each of my packages if for some reason I need to do that! I can be sure the structure of my program doesn’t just become a bag of objects because the compiler enforces it for me: I cannot pull an object of an arbitrary type into my object; I can only pull in an object whose type is in the public API of a library (and package) that is on my classpath.