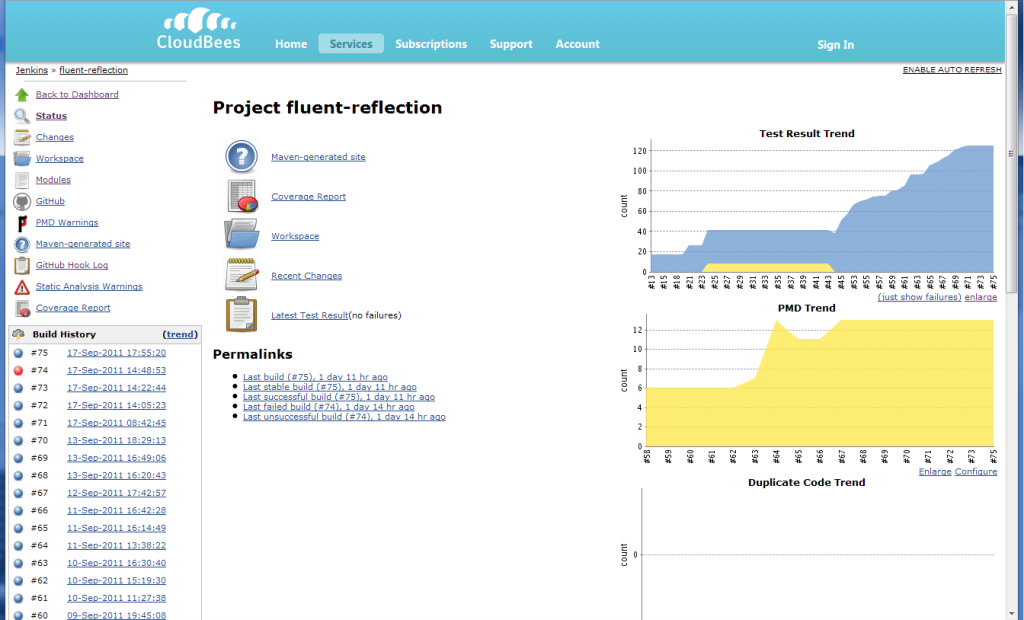

When following an iterative development methodology, compilation time is a concern. To safely make a rapid series of small transformations to a program, you need to recompile and test regularly. An IDE that can compile incrementally is very helpful, but a fast, fully automated build and test cycle is still needed in several cases: for more complex programs with code and resource generation phases, for languages without that kind of compilation support, and for verifying that the CI build will work.

In a previous article I discussed a design principle that can be used to minimise the time required for a full compile of all the code in an application.

It is also possible to select design principles that reduce the amount of recompilation required by any given code change. Conveniently, it turns out that the same abstract layering approach can be used here too.

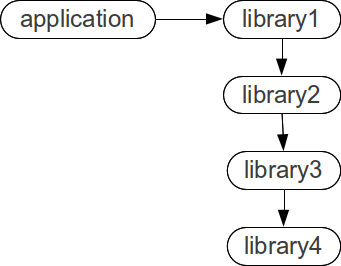

We return to the simple example application.

How long does recompilation take after a change to each component, assuming each component takes time “m” to compile?

| Component Changed | Compilation Order | Compilation Time |
|---|---|---|
| library4 | library4,library3,library2,library1,application | 5m |
| library3 | library3,library2,library1,application | 4m |
| library2 | library2,library1,application | 3m |
| library1 | library1,application | 2m |
| application | application | 1m |
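The table above can be reproduced with a small sketch. It assumes the example is a linear chain in which the application depends on library1, library1 on library2, and so on down to library4 (the graph is inferred from the compilation orders in the table), and that every module costs one unit of “m”. The names `DEPENDS_ON`, `rebuild_set` and `serial_rebuild_time` are hypothetical, chosen only for illustration.

```python
# Assumed dependency graph: each module maps to the modules it depends on directly.
DEPENDS_ON = {
    "application": ["library1"],
    "library1": ["library2"],
    "library2": ["library3"],
    "library3": ["library4"],
    "library4": [],
}


def rebuild_set(changed, depends_on):
    """Return the changed module plus every module that depends on it,
    directly or transitively."""
    affected = {changed}
    grew = True
    while grew:
        grew = False
        for module, deps in depends_on.items():
            if module not in affected and any(d in affected for d in deps):
                affected.add(module)
                grew = True
    return affected


def serial_rebuild_time(changed, depends_on):
    """Sequential rebuild cost in units of m: one unit per affected module."""
    return len(rebuild_set(changed, depends_on))


for name in ["library4", "library3", "library2", "library1", "application"]:
    print(name, f"{serial_rebuild_time(name, DEPENDS_ON)}m")
```

Because every module sits on a single chain, a change near the bottom forces everything above it to be rebuilt one after another.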

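We can calculate the instability of each module, taking transitive dependencies into account. Abusing the definitions slightly, we get:

instability = Ce / (Ca + Ce)

where Ce is the number of modules a module depends on (efferent couplings) and Ca is the number of modules that depend on it (afferent couplings).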

| Component | Ce | Ca | I |
|---|---|---|---|
| library4 | 0 | 4 | 0 |
| library3 | 1 | 3 | 0.25 |
| library2 | 2 | 2 | 0.5 |
| library1 | 3 | 1 | 0.75 |
| application | 4 | 0 | 1 |
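As a rough check of these figures, the following sketch counts transitive dependencies in both directions and applies the formula. It assumes the same linear chain of modules as the example; the helper names `transitive_deps` and `instability_table` are hypothetical.

```python
# Assumed dependency graph: each module maps to the modules it depends on directly.
DEPENDS_ON = {
    "application": ["library1"],
    "library1": ["library2"],
    "library2": ["library3"],
    "library3": ["library4"],
    "library4": [],
}


def transitive_deps(module, depends_on):
    """All modules reachable from `module` by following dependencies."""
    seen = set()
    stack = list(depends_on[module])
    while stack:
        dep = stack.pop()
        if dep not in seen:
            seen.add(dep)
            stack.extend(depends_on[dep])
    return seen


def instability_table(depends_on):
    """Map each module to (Ce, Ca, I), counting transitive dependencies."""
    closure = {m: transitive_deps(m, depends_on) for m in depends_on}
    table = {}
    for module in depends_on:
        ce = len(closure[module])  # modules this one depends on
        ca = sum(1 for other in depends_on if module in closure[other])  # dependants
        total = ca + ce
        table[module] = (ce, ca, ce / total if total else 0.0)
    return table


for module, (ce, ca, i) in instability_table(DEPENDS_ON).items():
    print(module, ce, ca, i)
```

Counting transitively is the "abuse" of the definition mentioned above: the usual metric counts only direct couplings.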

Comparing instability with compilation time is interesting. Less stable modules are the ones most likely to change, so they should have the lowest compilation times, which is what we see here:

| Component | Time | I |
|---|---|---|
| library4 | 5m | 0 |
| library3 | 4m | 0.25 |
| library2 | 3m | 0.5 |
| library1 | 2m | 0.75 |
| application | 1m | 1 |

Reducing Recompilation Time

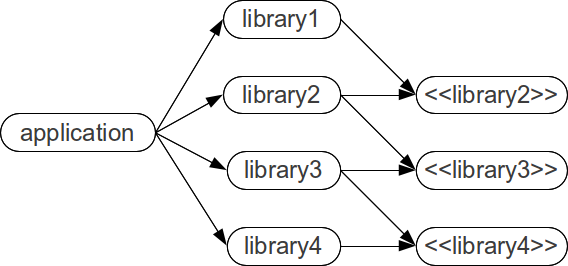

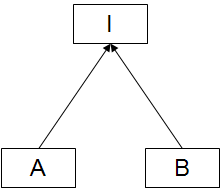

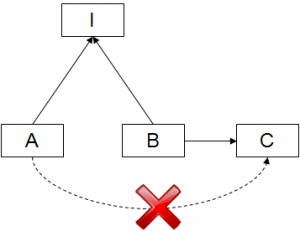

We can restructure our application using the dependency inversion principle. We split each layer into an abstract (interface) layer and a concrete implementation layer.

The application module becomes responsible for selecting the concrete implementation of each layer and configuring the layers of the application. This design pattern is known as Inversion of Control.
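A minimal sketch of this structure, reduced to two layers, might look like the following. The class and method names (`Library4Api`, `Library4Impl`, `fetch`, `process`, `build_application`) are hypothetical stand-ins for the abstract and concrete modules. In a compiled language each class would live in its own module, so that `Library3Impl` would only need rebuilding when an interface changes, not when `Library4Impl` does.

```python
from abc import ABC, abstractmethod


class Library4Api(ABC):
    """Abstract layer, standing in for <<library4>>."""

    @abstractmethod
    def fetch(self) -> str: ...


class Library4Impl(Library4Api):
    """Concrete layer, standing in for library4."""

    def fetch(self) -> str:
        return "data"


class Library3Api(ABC):
    """Abstract layer, standing in for <<library3>>."""

    @abstractmethod
    def process(self) -> str: ...


class Library3Impl(Library3Api):
    """Concrete library3: uses <<library4>> but never names Library4Impl."""

    def __init__(self, lower: Library4Api) -> None:
        self._lower = lower

    def process(self) -> str:
        return self._lower.fetch().upper()


def build_application() -> Library3Api:
    """The application selects the concrete implementations and wires the layers."""
    return Library3Impl(Library4Impl())


print(build_application().process())  # prints "DATA"
```

Only `build_application` refers to concrete classes, which is why the application is the one module that must be rebuilt after any change.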

Taking into account the ability of a multi-core machine to compile modules in parallel, we get the following minimum compilation times:

| Component Changed | Compilation Order | Compilation Time |
|---|---|---|
| library4 | library4,application | 2m |
| <<library4>> | <<library4>>,{library4,library3},application | 3m |
| library3 | library3,application | 2m |
| <<library3>> | <<library3>>,{library3,library2},application | 3m |
| library2 | library2,application | 2m |
| <<library2>> | <<library2>>,{library2,library1},application | 3m |
| library1 | library1,application | 2m |
| application | application | 1m |
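With unlimited parallelism, the rebuild time after a change is the length of the longest chain of affected modules rather than their count. The sketch below illustrates this under stated assumptions: the dependency graph is inferred from the compilation orders in the table, every module costs one unit of “m”, and the name `parallel_rebuild_time` is hypothetical.

```python
# Assumed graph for the restructured design: module -> direct dependencies.
DEPENDS_ON = {
    "<<library4>>": [],
    "<<library3>>": [],
    "<<library2>>": [],
    "library4": ["<<library4>>"],
    "library3": ["<<library3>>", "<<library4>>"],
    "library2": ["<<library2>>", "<<library3>>"],
    "library1": ["<<library2>>"],
    "application": ["library1", "library2", "library3", "library4"],
}


def parallel_rebuild_time(changed, depends_on):
    """Minimum rebuild time in units of m, assuming each module takes 1m
    and independent modules compile in parallel."""
    finish = {changed: 1}  # the changed module is compiled first

    def finish_time(module):
        if module in finish:
            return finish[module]
        dep_times = [finish_time(d) for d in depends_on[module]]
        rebuilt = [t for t in dep_times if t > 0]
        # A module is rebuilt only if at least one dependency was rebuilt;
        # 0 means it is unaffected by the change.
        finish[module] = max(rebuilt) + 1 if rebuilt else 0
        return finish[module]

    return max(finish_time(m) for m in depends_on)


for name in DEPENDS_ON:
    print(name, f"{parallel_rebuild_time(name, DEPENDS_ON)}m")
```

A real build would be limited by the number of cores and by uneven module sizes, so these figures are lower bounds.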

The compilation time for the abstract modules is uniformly 3m, and the compilation time for the concrete modules is uniformly 2m. The application itself is always the last to be compiled so is 1m as before.

How has the design change affected the stability of the modules?

| Component | Ce | Ca | I |
|---|---|---|---|
| library4 | 1 | 1 | 0.5 |
| <<library4>> | 0 | 3 | 0 |
| library3 | 2 | 1 | 0.66 |
| <<library3>> | 0 | 3 | 0 |
| library2 | 2 | 1 | 0.66 |
| <<library2>> | 0 | 3 | 0 |
| library1 | 1 | 1 | 0.5 |
| application | 4 | 0 | 1 |

Again we see that the less stable a module is, the lower its compilation time:

| Component | Time | I |
|---|---|---|
| library4 | 2m | 0.5 |
| <<library4>> | 3m | 0 |
| library3 | 2m | 0.66 |
| <<library3>> | 3m | 0 |
| library2 | 2m | 0.66 |
| <<library2>> | 3m | 0 |
| library1 | 2m | 0.5 |
| application | 1m | 1 |

This example is quite simple, so the instability metric of the modules is dominated by the application. It does illustrate, however, how we can use dependency inversion to help establish an upper bound on the recompilation time for any given change in a concrete module. I will examine a more realistic example in a future article.